Blog :: UMass CTF 2021 Postmortem

This was the first year our capture-the-flag event, UMass CTF 2021, was open to the public. The competition started Friday, March 26th at 18:00 EDT, and ended Sunday, March 28th at the same time. By the end of the competition, we had 1991 registered users, belonging to 1160 registered teams. No teams were tied, we had just one unsolved challenge, and each of the "harder" challenges had just one or two solves.

In this post, I will be reflecting on what we did well, and how we intend to improve for next year's UMass CTF.

But, before I begin, I realize that some may not know what a capture-the-flag (CTF) is. If you need an explanation, I will direct you to this article.

A huge thank you to the rest of the UMass Cybersecurity Club, especially those on infrastructure who worked around the clock to help me fix challenges. Thank you to my beautiful, loving partner, who helped me come up with the names and flags for quite a few challenges. Thank you to my loving parents, who hosted me while I worked on this, my degree, and my research. Thank you to my best friend, who made some challenges when I mentioned the competition to him.

Challenge Design

I'll get one thing out of the way: writing challenges will take much more time than you're expecting it to. I was asked to come up with ten or so challenges about three months before we were supposed to go live. I thought I'd be able to get them done over the course of a weekend before spring semester started. In reality, I was working on challenges up to the day of.

Below are some thoughts on the challenges I made, from what I would consider "most interesting" to "least interesting". Nearly every challenge had something go wrong with it.

replme (and replme2)

This was my favorite challenge.

Writeups:

- Janet v1.1 REPL Sandbox Bypass (by Aneesh Dogra)

- UMass CTF 2021 - replme [pwn] (by TheGoonies)

- UMassCTF'21 replme writeup (by Chai Yi Chen)

Older versions of the Janet programming language had a vulnerable NaN boxing implementation. An attacker capable of running arbitrary Janet code could write specific bytes to a float64 object, resulting in type confusion.
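To make the idea concrete, here is a minimal sketch of NaN boxing in general. The layout (a quiet-NaN prefix, 3 tag bits, 48 payload bits) and the names `box` and `unbox` are assumptions chosen for illustration; this is not Janet's actual layout, which this post doesn't describe.

```python
import math
import struct

# Hypothetical layout, for illustration only (not Janet's real one):
# all exponent bits plus the quiet bit set, then 3 tag bits, then 48 payload bits.
QNAN = 0x7FF8_0000_0000_0000
TAG_SHIFT = 48
PAYLOAD_MASK = (1 << 48) - 1


def box(tag, payload):
    """Pack a type tag and a payload (e.g. a pointer) into a float64 NaN."""
    bits = QNAN | ((tag & 0x7) << TAG_SHIFT) | (payload & PAYLOAD_MASK)
    return struct.unpack("<d", struct.pack("<Q", bits))[0]


def unbox(value):
    """Recover (tag, payload) from the raw bits of a float64."""
    bits = struct.unpack("<Q", struct.pack("<d", value))[0]
    return (bits >> TAG_SHIFT) & 0x7, bits & PAYLOAD_MASK


# Whoever controls the raw bytes of a float controls the tag and the payload.
forged = struct.unpack("<d", bytes.fromhex("efbeadde0000fb7f"))[0]
```

In a runtime that trusts the tag bits, a forged value like this would be treated as whatever type the tag names, with the payload used as its pointer. That is the type confusion.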

The challenge presented itself as an online REPL for Janet[1] that blacklisted functions dealing with the filesystem. The blacklisting was done by overwriting the functions with one that would error (i.e. (defn os/shell [&] (error ...))). The intended solution was to use the NaN boxing vulnerability to create a cfunction object that pointed at the original implementation.

The reason there was a replme2 is that I didn't blacklist all of the relevant functions. At least one team realized that they could call (slurp "flag.txt"). This is something that playtesting by the rest of the team likely would have caught, but I'll save that for a later section. Sunday morning, I released a fixed version. Some teams solved it instantly, indicating that they had either found a different unintended solution, or that they had solved replme the intended way.
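The blacklist gap is easy to reproduce in miniature. Below is a rough Python sketch of the same pattern, not the challenge's Janet code: the environment is a plain dict, and `make_sandbox_env` and the two stand-in functions are hypothetical names made up for this example.

```python
def make_sandbox_env(env, blocked):
    """Copy env, replacing every blocked name with a stub that raises."""
    def refuse(name):
        def stub(*args):
            raise PermissionError(f"{name} is disabled")
        return stub

    sandboxed = dict(env)
    for name in blocked:
        if name in sandboxed:
            sandboxed[name] = refuse(name)
    return sandboxed


# Stand-ins for the real functions; they only return strings here.
env = {
    "os/shell": lambda cmd: f"ran {cmd}",
    "slurp": lambda path: f"contents of {path}",
}

# Only os/shell is blocked, so slurp still reaches the filesystem stand-in.
sandbox = make_sandbox_env(env, blocked=["os/shell"])
```

Anything left off the `blocked` list stays callable, which is why a blacklist needs a complete inventory of dangerous functions. Starting from an empty environment and adding only known-safe names fails in the safer direction.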

babushka

A Python bytecode crackme. The challenge was titled "babushka" because the program is designed like a matryoshka doll. There is one entry point to a long chain of functions, each one unpacking and calling the next in the chain.

Each of these functions does some check on the input. All of them, except for the fourteenth, have some check that involves a decoy flag, and some way of combining the results of the checks further down. They are combined such that only the output of one of the 500 functions is used.

The intended solution was to write a script to extract all of the functions, and look at the code for combining function outputs to see which mattered. You could then manually reverse engineer that function to get the flag.

The way the input is checked is about the same across all functions, so in theory, one could write a script to extract the value the input is checked against from all of the functions, and then try all 500 to see which one worked.
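For anyone curious what the nesting looks like, here is a minimal sketch of a matryoshka-style chain and a script that peels it. It is not the generator I used: the `marshal` packing, the helper names, and the absence of any checks or obfuscation are all simplifications for illustration.

```python
import marshal


def wrap(inner_source, layer):
    """Return source for a layer that unpacks and runs the next layer."""
    code = compile(inner_source, f"<layer {layer}>", "exec")
    blob = marshal.dumps(code)
    return f"import marshal\nexec(marshal.loads({blob!r}))"


def build(depth, core="result = 'core reached'"):
    """Wrap the innermost source in `depth` unpacking layers."""
    source = core
    for layer in range(depth):
        source = wrap(source, layer)
    return source


def peel(code):
    """Walk the chain statically by following the bytes constant in each layer."""
    layers = [code]
    while True:
        blobs = [c for c in layers[-1].co_consts if isinstance(c, bytes)]
        if not blobs:
            return layers
        layers.append(marshal.loads(blobs[0]))


namespace = {}
exec(build(3), namespace)
layers = peel(compile(build(3), "<outer>", "exec"))
```

Once every layer is a code object in a list, `dis.dis` on each one shows the comparisons without running anything. Note that the `marshal` format is specific to the Python version that produced it, so a chain packed this way only loads on a matching interpreter.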

I programmatically generated and obfuscated the script that was given to teams. This challenge was fairly unremarkable, besides there being no writeups, and some people giving me flak because it didn't work on their version of Python.

Oh well. I had a lot of fun making it.

chains

Writeups:

- chains (by Chris Greene)

- UMassCTF 2021 - Chains [Reversing] (by Scavenger Security)

- Chains (by Arijeet Mondal)

This was an "optimizeme" challenge, one where the flag is generated by an inefficient algorithm. To get the flag in a reasonable amount of time, one would need to either patch the algorithm or reimplement it.

Here, the characters of the flag were encoded as very large numbers, and the program would, for each character, generate the entire Collatz sequence to see where that number occurs. There were some pretty clever solutions, but the one I used when I was playtesting was to memoize the Collatz function.
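A minimal sketch of the memoization idea follows. It assumes the expensive part is counting Collatz steps down to 1; the binary's exact lookup isn't reproduced here, and `collatz_steps` is a name made up for this example.

```python
# Cache of already-known step counts, shared across calls.
_steps = {1: 0}


def collatz_steps(n):
    """Number of Collatz steps needed to reach 1 from n (n >= 1)."""
    path = []
    # Walk forward until we hit a value whose step count is already known.
    while n not in _steps:
        path.append(n)
        n = n // 2 if n % 2 == 0 else 3 * n + 1
    steps = _steps[n]
    # Fill in the cache for every value visited on the way.
    for value in reversed(path):
        steps += 1
        _steps[value] = steps
    return steps
```

The loop is iterative rather than recursive so that long sequences don't hit Python's recursion limit. Every value seen along the way is cached, so later characters whose sequences merge into an earlier one finish almost immediately.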

Oh, and to make it less reasonable for someone to let the program run for the duration of the competition, I compiled for AARCH64. I apologize to the handful of people who were unable to get an ARM environment set up.[2]

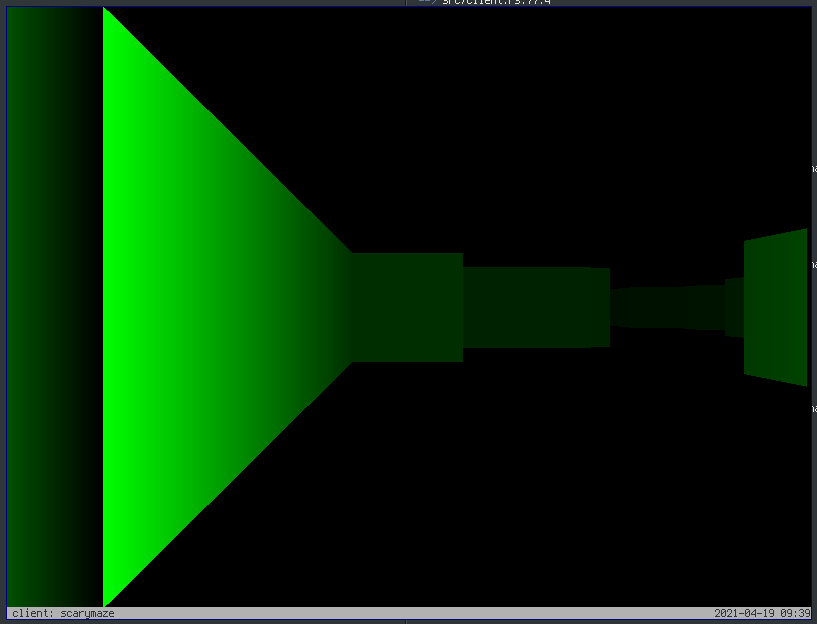

scarymaze

This was my second favorite challenge, even though a lot went wrong with it.

This was a networked maze game written in Rust, where the server would spit out the flag after 500 mazes had been solved. I spent a lot of time on the packet format and the renderer.

Communications between the client and the server were encrypted. The algorithm was AES-128-CBC with the key STRINGS NOT HERE.[3] I made things slightly easier by using OpenSSL for the AES implementation, and slightly harder by linking statically. If I'd linked dynamically, it would have been trivial to run the client with ltrace. Static linking should've helped somewhat, since the OpenSSL .a includes a ton of informative strings, but no one came close to a solution until I'd re-released the client binary with symbols.

And then, in the last 30 minutes or so of the competition, two people had scripts, but the server was crashing around 200 mazes in. I couldn't figure out the bug in that short period of time, so I hastily re-deployed with a server that would spit out the flag after 50 solves. It was stressful.

Small lesson: networked games, where someone has to reimplement the protocol, should be somewhat tolerant to errors. In this case, the server panicked and closed the connection if there was anything wrong with the header, which I'll admit is bullshit.
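Here is a rough sketch of what "tolerant" could look like, written in Python rather than the server's Rust. The header layout (two magic bytes, a type byte, a big-endian length), the `MZ` magic, and the `ERR`/`OK` replies are all invented for this example and are not scarymaze's real packet format.

```python
import struct

# Hypothetical header: 2-byte magic, 1-byte packet type, 2-byte payload length.
HEADER = struct.Struct(">2sBH")
MAGIC = b"MZ"
MAX_PAYLOAD = 4096


def parse_header(raw):
    """Return ((kind, length), None) on success or (None, reason) on failure."""
    if len(raw) < HEADER.size:
        return None, "short header"
    magic, kind, length = HEADER.unpack_from(raw)
    if magic != MAGIC:
        return None, "bad magic"
    if length > MAX_PAYLOAD:
        return None, "payload too large"
    return (kind, length), None


def handle(raw):
    """Answer a malformed header with an error reply instead of hanging up."""
    header, error = parse_header(raw)
    if error is not None:
        return b"ERR " + error.encode()
    kind, length = header
    return b"OK %d %d" % (kind, length)
```

The point is that someone reimplementing the protocol gets a reason back and can keep the session open, instead of guessing why the connection dropped.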

stegtoy

The idea of leaking memory through a visual medium is neat to me, so I made a shitty BMP utility that encodes text into the least-significant bits of the image data. Source code wasn't made available during the competition, as I find leaking memory blindly to be more interesting.

This seemed to make it a very difficult challenge. Only one team solved it, which surprised me. BMP is simple enough that there are only so many things one could have tried. Anyway, the intended solution was to overwrite the "data start" pointer in some BMP file so that the tool would leak the heap data containing the flag.
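For reference, here is a minimal sketch of LSB encoding that trusts the BMP header's "data start" field, the 32-bit little-endian offset stored at byte 10 of the file header. This is not the challenge's source; the bit order and the function names are assumptions.

```python
import struct


def pixel_offset(bmp):
    """Read the pixel data offset from the BMP file header."""
    if bmp[:2] != b"BM":
        raise ValueError("not a BMP file")
    return struct.unpack_from("<I", bmp, 10)[0]


def embed(bmp, message):
    """Write message bits, most significant first, into the LSBs of the pixel data."""
    out = bytearray(bmp)
    start = pixel_offset(bmp)
    bits = [(byte >> shift) & 1 for byte in message for shift in range(7, -1, -1)]
    if start + len(bits) > len(out):
        raise ValueError("message too long for this image")
    for i, bit in enumerate(bits):
        out[start + i] = (out[start + i] & 0xFE) | bit
    return bytes(out)


def extract(bmp, length):
    """Read `length` bytes back out of the LSBs, starting at the header's offset."""
    start = pixel_offset(bmp)
    result = bytearray()
    for i in range(length):
        byte = 0
        for j in range(8):
            byte = (byte << 1) | (bmp[start + i * 8 + j] & 1)
        result.append(byte)
    return bytes(result)
```

Both functions take the offset straight from the file. In Python an out-of-range offset just raises `IndexError`, but a tool written in a language without bounds checks would keep reading whatever sits at that address, which is how an edited offset can end up pointing at heap data.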

warandpieces

Writeups:

- War and Pieces (by legallybearded)

- War and Pieces (by ugotjelly)

This wasn't one I had originally planned. I was in the grocery store one day and saw a bag of toy army soldiers for $1.99. I bought them because that was one of my favorite things to play with when I was younger. My mother, upon seeing them, asked if I had gotten them for the CTF, which gave me the idea.

The pieces came in 6 poses and 2 colors. I encoded the flag as hexadecimal, and assigned each combination of pose/color/orientation to a hexadecimal nibble. In retrospect, the challenge would've been more interesting had I treated the flag as a natural number, and written it in base-12 using the piece alphabet.
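The base-12 variant could be sketched as follows. The mapping from a digit to a (pose, color) pair is an arbitrary choice made for this example, not something I used in the actual challenge.

```python
def to_base12(flag):
    """Treat the flag bytes as one big-endian natural number and list its base-12 digits."""
    n = int.from_bytes(flag, "big")
    digits = []
    while n:
        n, d = divmod(n, 12)
        digits.append(d)
    return digits[::-1] or [0]


def from_base12(digits):
    """Rebuild the flag bytes from base-12 digits (most significant first)."""
    n = 0
    for d in digits:
        n = n * 12 + d
    return n.to_bytes((n.bit_length() + 7) // 8, "big")


def piece(digit):
    """Hypothetical mapping: 6 poses times 2 colors gives 12 distinct pieces."""
    pose, color = divmod(digit, 2)
    return pose, color


digits = to_base12(b"UMASS{lil_t0y_s0lj4s}")
lineup = [piece(d) for d in digits]
```

Because the digits no longer line up with byte boundaries, a solver can't decode the picture one character at a time. One caveat of this encoding is that leading zero bytes would be lost, which doesn't matter for a flag that starts with "U".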

The flag was supposed to be UMASS{lil_t0y_s0lj4s}, but I made a mistake when lining up the pieces: in the script I had made to help me line up the toys for the picture, I had accidentally used the same color for two different digits. Someone was kind enough to point it out, so we accepted the mangled flag (UMASS{lfl_t0v_s0lj4s}). This wasn't a great decision, though, since you wouldn't realistically be able to pull that out of a list of guesses. I should have just done the picture over again.

I'm hoping that next time, I won't be working on challenges in the week before the competition. Here, I had only playtested the first few steps because I was so short on time, hence this mistake falling through the cracks.

suckless2

I have little to say. Someone asked if I could recycle some of my challenges from last year, but I had already posted a writeup. I ended up modifying the challenge in a way that would render my exploit useless, and releasing that as a challenge. It was meant to be easy.

easteregg

Writeups:

- UMassCTF'21: easteregg (by Łukasz Szymański)

- easteregg (by Th0m4sK)

- Easteregg UMASS CTF 21 (by Hacklabor)

- Engenharia reversa - UMass CTF'21 - 'Easteregg' Writeup (by zapzap kkkkkkkkkkkkkkkkkkkkk '-')

Like warandpieces, this was not a challenge I had originally planned to make. One of the problem sets for the introduction to computer systems class this semester involved reverse engineering an ELF binary. In an effort to appeal to students who actually attend UMass, I was asked to make an easy reverse engineering challenge in the same vein.

I took that class two years ago, and I still had the programs I'd written for it, so I took one of them (a text adventure game), added an "easter egg" that you would need to reverse engineer the executable to find, and uploaded it.

This was one of the most solved challenges.

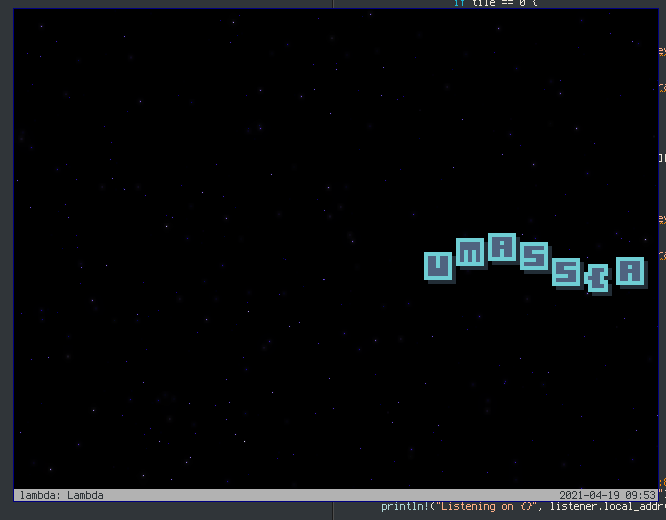

lambda

Next year, no more compiled Haskell.

I spent a lot of time on a cool sine scroller with one of my favorite mod files playing in the background.

And then I made the mistake of writing the key check algorithm in Haskell. This is a reminder that there's a fine line between "possible" and "reasonable". No one solved this.

When I'm done with school in two weeks, I'll give this my all, and give it a proper write-up.

Lessons Learned

Now, I'll share some specific morsels of wisdom that came to me after this very stressful weekend.

Playtesting a Priority

This might seem obvious, but please humor me.

I made an effort to go through all of my challenges to see that they were reasonable, and I suspect this was the case for the rest of the team as well. It was better than nothing, but we could have done better in a couple of ways. I'll use three challenges as examples: 'replme' and 'warandpieces', challenges I had created, and 'pikcha', made by Steven.

The original "replme" was solvable by one function that wasn't blacklisted. I think a less-experienced player would have come across it, and we do have a handful of people on our team who are less familiar with the "pwn" category.

When playtesting "warandpieces", I only went through the first line or so. The error doesn't show up until much later. If I had either solved it in full, or had someone else playtest it, I would have caught the error.

"pikcha" included the answer in the session. LOL. I think if I had playtested it before we went live, I would have caught it.

These issues could have been caught if we had playtested our peers' challenges.

This is what I have proposed to do next time: for every challenge, we choose two other people to playtest it. That way, there are three (if you include the challenge creator) pairs of eyes on the challenge. The only work on challenges happening in the last month should be fixing issues that come up during playtesting. Playtesters should also be tracking how much time they spend on each challenge.

Obvious Solutions Should Be Obvious

Often, a CTF will have an easy "entry" challenge that doesn't require any solving. The flag might be given in the challenge description. In our case, it was hidden somewhere in the communication platform we used. As an idea, it looks good on paper, but actually implementing the challenge was one of the biggest mistakes we made. We had nearly a hundred people spamming some variation of "/flag" or "!flag" into the chat, and a handful of people opening support tickets because they thought the flag was supposed to be obtained by talking to us.

A few minutes in, someone made the following joke:

<Youssef>: UMASS{WowIReallySuck...}

<ralpmeTS>: !flag

<Youssef>: I actually hate myself...

<Youssef>: I found the flag.

Besides 'UMASS{flag}', which is what we had in the message describing the flag format, 'UMASS{WowIReallySuck…}' was the second most popular incorrect answer for this challenge.

It was a mess. No more challenges like this.[4]

Challenge Update Transparency

When challenges went down, or were modified, announcements went through our communication platform. Not everyone saw those announcements, and some teams had an unfair advantage by getting both broken and fixed versions of the same challenge.

Next year, announcements will occur on the CTF platform as well. And hopefully, with our commitment to playtesting, we will not need to fix any challenges after going live, but we will ensure the old versions are still available if we do.

Matchmaker

Some people seemed to still be looking for teams in the week leading up to the competition, so I put together a little form where people would enter their experience, timezone, etc.

It was a good idea, but I think only about one team came about from this, since a lot of people either put bad contact information or flaked.

Next year, the matchmaking form will be available at the time we announce the competition, and the matchmaking process will be partially automated.

—

Footnotes:

[1] It isn't really a REPL. Just a textarea for a Janet script, and a button that runs it, showing the output in a different textarea.

[2] Someone mentioned being on mobile data, and that QEMU would have been prohibitively large for his plan. I felt terrible.

[3] Surprisingly, nobody asked me about this. I thought this would have been a dead giveaway.

[4] I honestly didn't like the idea in the first place, because I hate Discord and don't want to feel as though I'm forcing people to use it if they don't already. But I digress…

Comments (3)

https://mastodon.sdf.org/@jakob

@wasamasa I am worried about the testing fatigue, which is why I'm proposing a full month where no challenge development occurs besides playtesting. We have a sizeable team of people working as staff, too, so I hope we'd be able to distribute the work in a way that doesn't cause too much stress.

I'll need to think about how to deal with the first point you've brought up. It might involve bringing in more volunteers, like you suggested, but it's a little hard to find ones with the right skills.

https://lonely.town/@wasamasa

@jakob I pretty much agree with your self assessment. Been there, done that. Although I didn't end up releasing my equivalent of a haskell challenge, I didn't feel comfortable with putting up something I couldn't solve myself, lol.

You mention that playtesting is important. True, but it's like any other kind of testing. The more you have a person testing things, the more they become accustomed to the testing process and may end up unsuitable to perform any further testing for a number of reasons:

- They learn what to avoid and what to do, thereby not testing things thoroughly. For example they'd no longer bother trying out things that may take a lot of time because they don't have much time to spend.

- They become fatigued by testing, be it because they've already been designing CTF challenges or because they've been testing too much and started to hate it. Personally I'd recommend to take as much of a break as necessary the moment it becomes drudgery and ceases to be fun.

From what I've read in other media about usability testing, the solution to this is spending lots of money to keep recruiting new testers and cycling them (hello Apple). This is expensive and not an option for most institutions, let alone groups or individuals doing this for fun. Some recurring CTFs are organized by different teams though...

https://mastodon.sdf.org/@jakob

I haven't submitted this to any site aggregators because I don't think this is particularly insightful or well-written. You're welcome to do so if you disagree with my judgement.
